Programmes are moving faster than your data can keep up. New AI tools appear weekly, teams spin up apps to get work done, and decisions must be made today, not next quarter. The risk: leaders decide on stale, inconsistent, or opaque data. Lightweight data governance fixes this without slowing delivery. It’s not a committee; it’s a small set of controls that make data decision-grade and auditable in weeks.

The case for “lightweight” is strong. Poor data quality still drags on value: Gartner estimates the average cost at $12.9M per year per organisation. Meanwhile, the average company juggles roughly 93 apps (passing 100 in the latest figures), and large enterprises run 231 on average, which is fertile ground for conflicting truths. At the same time, “shadow AI” accelerates risk: the volume of corporate data that employees put into public AI tools rose 485% in one year. And new regulation such as the EU Data Act hardens expectations around access, portability, and interoperability.

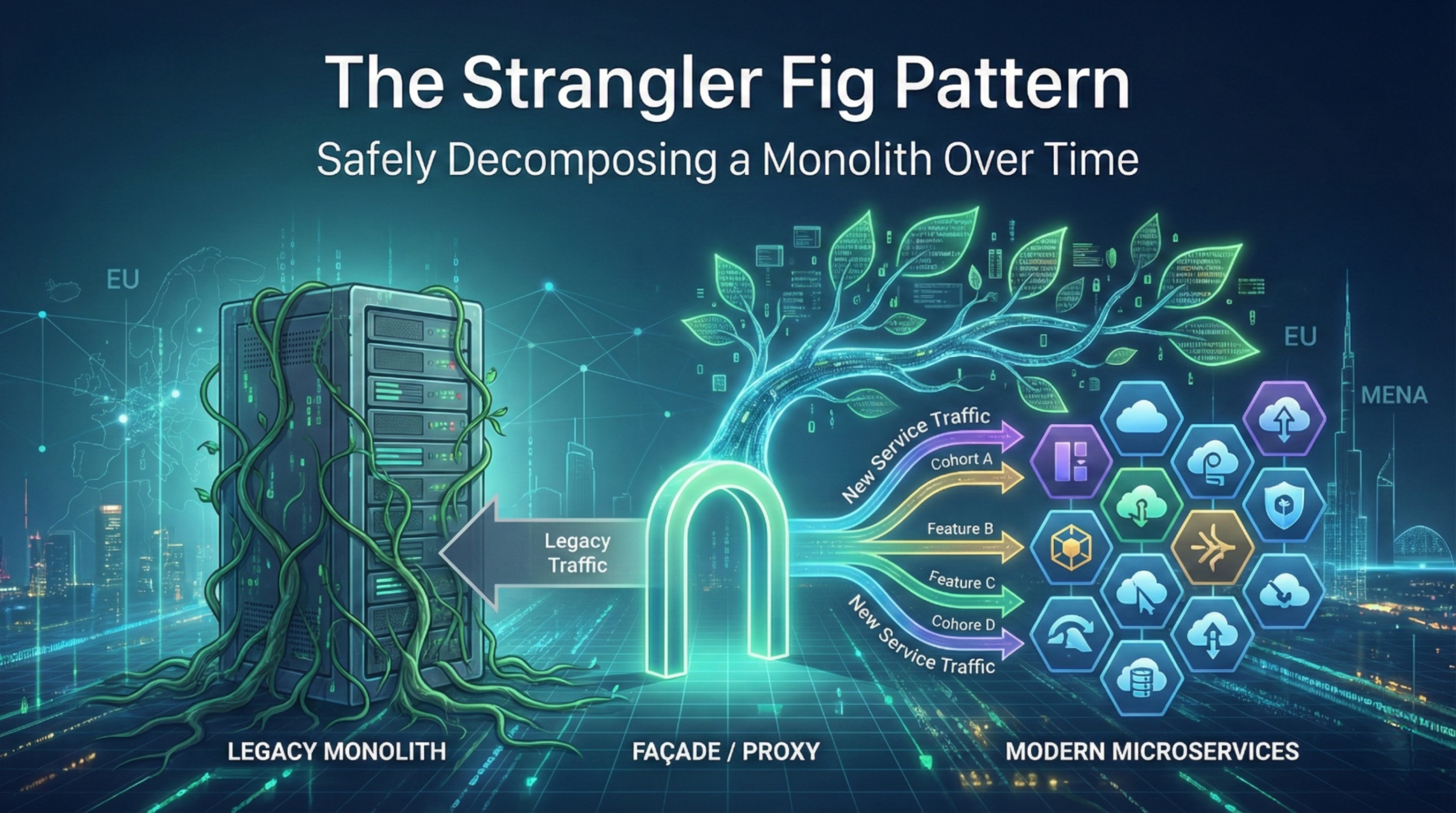

This article shows EU/MENA executives and programme managers how to put governance on rails — minimal rules, maximum clarity — so teams can ship faster and leaders can decide with confidence.

What “data you can decide with” means (and why speed needs lightweight governance)

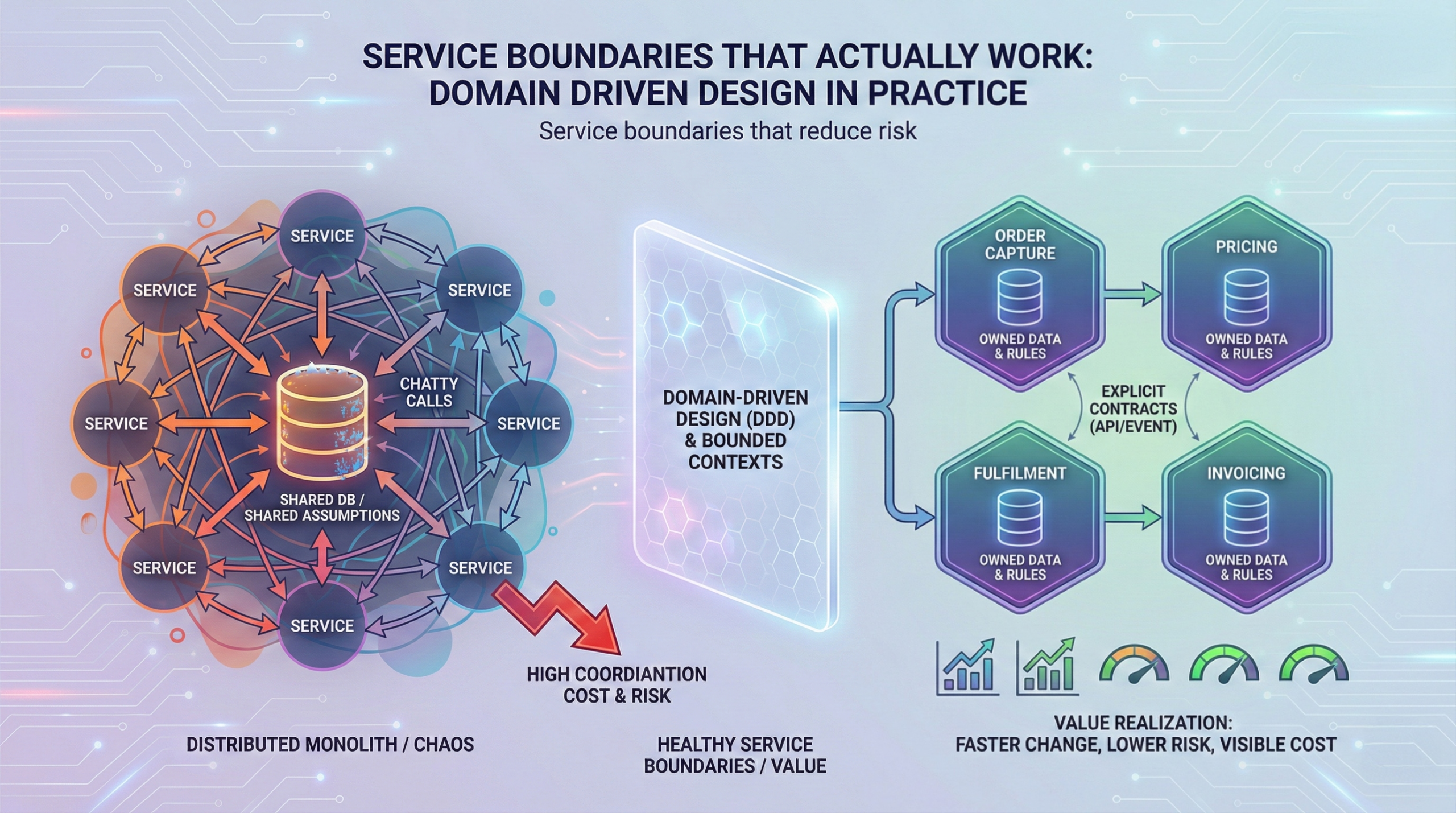

“Decision-grade” data has four traits: clear ownership, known quality, explainable lineage, and controlled access. In fast-moving programmes, these traits must be implemented with the least possible ceremony. Otherwise, governance becomes a brake.

The pressure is real. Poor data quality costs organisations $12.9M per year on average — think rework, missed opportunities, and compliance risk. In parallel, toolchains are sprawling: firms now run dozens to hundreds of applications, which multiplies sources of truth and metadata gaps. If we don’t standardise a few simple rules, every sprint adds entropy.

AI raises the bar again. Employees are past experimentation; they are actively pasting corporate content into public tools. One study saw a 485% YoY increase in corporate data entering AI tools, which is a governance issue as much as a security one. Lightweight controls (e.g., approved AI endpoints, logging, prompt/response retention policies) let you keep the speed without the data leaving your perimeter.
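As a rough illustration of the first two controls mentioned above (approved AI endpoints and logging), the sketch below checks a request URL against an allowlist and builds an audit record. The host name, function names, and record fields are hypothetical, and the choice to store only the prompt length stands in for whatever retention policy you adopt.

```python
import logging
from urllib.parse import urlparse

# Hypothetical allowlist of approved AI endpoints; replace with your own hosts.
APPROVED_AI_HOSTS = {"ai.internal.example.com"}

logger = logging.getLogger("ai_gateway")


def is_approved_endpoint(url: str) -> bool:
    """Return True only if the URL's host is on the approved list."""
    host = urlparse(url).hostname  # None when the string has no network location
    return host is not None and host in APPROVED_AI_HOSTS


def record_ai_call(user: str, url: str, prompt: str) -> dict:
    """Check the endpoint and build an audit record for the call.

    Only the prompt length is recorded here; whether to retain the full
    prompt and response is a policy decision, not something this sketch decides.
    """
    entry = {
        "user": user,
        "host": urlparse(url).hostname,
        "allowed": is_approved_endpoint(url),
        "prompt_chars": len(prompt),
    }
    logger.info("ai_call %s", entry)
    return entry
```

In practice a check like this would sit in a proxy or gateway in front of the AI service, so that blocked calls never leave the perimeter and allowed calls always leave a trace.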

Regulation is also converging on data portability and interoperability. The EU Data Act creates rights and duties around access, sharing and switching providers — making lineage, permissions, and interface standards not optional but expected.

Lightweight data governance, defined.

A practical model has six controls you can stand up in 4–8 weeks across your programmes:

- Owner on every table / metric. A named business owner, not just IT. Publish it in your data catalogue and dashboards.

- Critical data elements (CDEs) list. 30–60 fields that drive decisions (KPIs, forecasts, commitments). Each has a data contract: definition, acceptable ranges, update frequency.

- Quality rules with visible badges. Start with 5–10 rules per CDE (freshness, null rate, valid codes). Surface a green/amber/red badge on relevant dashboards.

- Lineage you can trace in a meeting. One screen that shows source → transforms → metric, so programme leads can answer “where did this number come from?”

- Least-privilege access, centrally managed. Roles and approval flows; no local Excel copies of master data.

- Decision logs. For high-stakes choices, capture the data snapshot, caveats, and approver. This is priceless for audits and post-mortems.
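To make the data contract and the quality badge more concrete, here is a minimal sketch in Python. The class and function names, the 2% null-rate threshold, and the mapping from failed rules to colours (none is green, one is amber, two or more is red) are all assumptions for illustration; your own rules and thresholds would replace them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DataContract:
    """Contract for one critical data element (CDE)."""
    name: str
    owner: str                    # named business owner
    definition: str
    min_value: float              # acceptable range, lower bound
    max_value: float              # acceptable range, upper bound
    max_age: timedelta            # expected update frequency
    max_null_rate: float = 0.02   # assumed threshold


def badge(contract: DataContract, values: list,
          last_updated: datetime, now: datetime) -> str:
    """Return 'green', 'amber' or 'red' from three simple rules."""
    failures = 0

    # Rule 1: freshness. The data must be newer than the contract allows.
    if now - last_updated > contract.max_age:
        failures += 1

    # Rule 2: null rate. An empty load counts as fully null.
    nulls = sum(1 for v in values if v is None)
    null_rate = nulls / len(values) if values else 1.0
    if null_rate > contract.max_null_rate:
        failures += 1

    # Rule 3: range. Every non-null value must sit inside the bounds.
    present = [v for v in values if v is not None]
    if any(v < contract.min_value or v > contract.max_value for v in present):
        failures += 1

    if failures == 0:
        return "green"
    return "amber" if failures == 1 else "red"
```

A dashboard could call `badge` on each refresh and show the result next to the metric, with the contract's `owner` field telling the team who to ask when it turns amber or red.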

Why this works: it targets the sources of waste. Data teams already spend a large share of their time on checks: in Anaconda's 2023 survey, roughly 80% said they spend 25–50% of their time on security, quality, and related checks. Standardising the “few vital rules” cuts duplication while improving trust.

Running governance inside fast programmes (not around them)

Lightweight governance lives inside the sprint rhythm, not as a separate committee. Use this sprint cadence:

- Backlog hygiene (Monday): Add data governance tasks next to features (e.g., “define owner for InvoiceAmount” or “add freshness check for SalesOrders”).

- Daily flow: Engineers commit tests for quality rules; analysts update the CDE list when adding a metric.

- Demo (bi-weekly): Show the feature and its data badge. If amber/red, agree the fix and owner.

- Decision pack (rolling): For steering forums, include a one-pager with KPI deltas, data badges, and lineage snapshot.

Examples:

- EU consumer goods (portfolio dashboard). The PMO team ran weekly governance alongside delivery. Within five weeks, they moved five CDEs (sell-in, fill rate, on-shelf availability, returns, promo spend) from red/amber to green. Steering time dropped 20 minutes per meeting because provenance was visible.

- MENA energy (AI work orders). A predictive maintenance pilot stalled due to mistrust in parts data. The team appointed business owners for three CDEs, added two validation rules, and exposed lineage in the app. Acceptance criteria passed in Sprint 4; the model could be evaluated on value, not debate.

If your landscape feels too complex, you’re not wrong. The average company uses ~93 apps (100+ this year), and large firms run 231 apps on average — coordination needs simple standards. Leadership also matters: McKinsey’s 2025 survey finds employees ready for AI, with leadership the biggest barrier — governance is where leadership shows up.

Blueprint: The minimum viable operating model

People.

- Data product owners (business) for each CDE set.

- Data steward (part-time) per domain to curate the CDE list and rules.

- Platform engineer / analyst to automate checks and keep lineage current.

Process.

- Definition of Done includes: owner set, rule added, lineage updated, access reviewed.

- Red/amber escalation at the sprint demo — fix before scale.

- Decision log created when steering signs off a spend, risk, or forecast change.
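A decision log entry can be as small as the record sketched below. This is one possible shape, not a prescribed format: the field names and the idea of storing a SHA-256 fingerprint of the data snapshot (rather than the snapshot itself) are assumptions chosen to keep the example short.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionLogEntry:
    decision: str
    approver: str
    caveats: list
    snapshot_hash: str   # fingerprint of the data the decision was based on
    logged_at: str       # ISO 8601 timestamp


def snapshot_fingerprint(rows: list) -> str:
    """Hash the snapshot rows so the exact inputs can be verified later."""
    # sort_keys makes the hash independent of dictionary key order.
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def log_decision(decision: str, approver: str, caveats: list,
                 rows: list, now: datetime = None) -> dict:
    """Build a decision log entry as a plain dictionary."""
    now = now or datetime.now(timezone.utc)
    entry = DecisionLogEntry(
        decision=decision,
        approver=approver,
        caveats=list(caveats),
        snapshot_hash=snapshot_fingerprint(rows),
        logged_at=now.isoformat(),
    )
    return asdict(entry)
```

Storing the fingerprint alongside the approver and caveats lets an auditor confirm later that an archived snapshot is the one the forum actually saw.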

Technology.

- A catalogue (even a lightweight one) to store owners/definitions.

- Automated quality (dbt tests, Great Expectations, or SQL checks).

- Lineage at transformation level (from ELT tool or BI model).

- Access via central identity — useful when you run dozens or hundreds of apps (Okta’s data shows the trend).
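For the lineage item above, the sketch below shows one simple way to answer “where did this number come from?” from a dependency map. The map itself, including the table names, is hypothetical; in a real setup it would be exported from the ELT tool or BI model rather than written by hand.

```python
# Hypothetical lineage map: each node lists the nodes it is built from.
LINEAGE = {
    "fill_rate": ["orders_clean", "shipments_clean"],
    "orders_clean": ["erp.sales_orders"],
    "shipments_clean": ["wms.shipments"],
}


def trace(node: str, lineage: dict, depth: int = 0, seen: set = None) -> list:
    """Return indented lines showing everything upstream of a node.

    Nodes that were already visited are listed but not expanded again,
    which also protects against cycles in the map.
    """
    seen = set() if seen is None else seen
    lines = ["  " * depth + node]
    if node in seen:
        return lines
    seen.add(node)
    for parent in lineage.get(node, []):
        lines.extend(trace(parent, lineage, depth + 1, seen))
    return lines


def sources(node: str, lineage: dict) -> list:
    """Return the raw sources (nodes with nothing upstream) feeding a node."""
    stack, found, visited = [node], set(), set()
    while stack:
        current = stack.pop()
        if current in visited:
            continue
        visited.add(current)
        parents = lineage.get(current, [])
        if not parents:
            found.add(current)
        stack.extend(parents)
    return sorted(found)
```

Printing `"\n".join(trace("fill_rate", LINEAGE))` gives a small indented tree that fits on one screen, which is the level of detail a steering meeting usually needs.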

Compliance.

- Keep a one-pager mapping your controls to the EU Data Act: access rights, switching/portability, interoperability. It speeds legal review and vendor selection.

Averroa Perspective

We embed governance inside delivery using DRIVE™ (Design → Run → Improve → Validate → Expand) and staff it with ORBIT™ — the right expertise at the right time.

- Design: Identify CDEs, owners, and initial rules; map lineage for the first KPIs.

- Run: Build features and governance artefacts together; publish badges and decision logs.

- Improve: Triage amber/red items; automate more checks; refine data contracts.

- Validate: Prove decision quality (e.g., forecast error, cycle time, rework).

- Expand: Roll standards to adjacent teams and vendors.

Engagement tracks:

- Research & Innovation (2–6 weeks): Governance quick-scan, CDE shortlist, and a costed roadmap.

- Execution & Delivery: Sprint-based build of dashboards/models with governance baked in.

- Rescue & Support: Stabilise data debt, close lineage gaps, and run SLA-backed quality.

The evidence finance and risk care about

- Quality drag: Avg $12.9M/year cost of poor data quality. Implication: tackle CDEs first to cut waste quickly. (Gartner)

- App sprawl: Avg 93–100+ apps per org; 231 in large enterprises. Implication: centralise access and lineage. (Okta)

- Shadow AI growth: +485% corporate data entering public AI tools. Implication: approve endpoints and log usage. (CFO Dive)

- Team time: ~80% of data teams spend 25–50% of their time on checks. Implication: codify and automate quality rules. (Anaconda)

- Leadership factor: Employees are ready for AI; leadership is the main barrier, so governance is a leadership instrument. (McKinsey & Company)

- Regulation: The EU Data Act codifies access, switching and interoperability. Implication: align contracts and metadata now. (European Commission)

Actionable Takeaways

- Name an owner on every KPI and CDE this month.

- Create a CDE list (30–60 fields) with definitions and acceptance rules.

- Add quality badges and lineage snapshots to your dashboards.

- Enforce least-privilege via central identity; remove offline copies of master data.

- Approve enterprise AI endpoints and log prompts/responses for critical use cases.

- Add governance items to the Definition of Done; demo the badge with the feature.

- Map your controls to EU Data Act obligations; brief legal and procurement.

- Run a monthly value review: fewer exceptions, faster decisions, audit wins.

If you want data you can decide with in under eight weeks, start a two-week diagnostic with Averroa’s AI & Analytics team.

References

- Gartner. Data Quality: Best Practices for Accurate Insights.

- Okta. Businesses at Work 2024 and 2025 updates.

- CFO Dive. Shadow AI surge threatens corporate data.

- Anaconda. State of Data Science 2023.

- European Commission. Data Act overview and explainer.

- McKinsey & Company (2025). AI in the workplace: empowering people….
